“If we go in front of a live camera that is using facial recognition to identify and interpret who they’re looking at and compare that to a passport photo, we can realistically and repeatedly cause that kind of targeted misclassification,” said the study’s lead author, Steve Povolny.

How it works

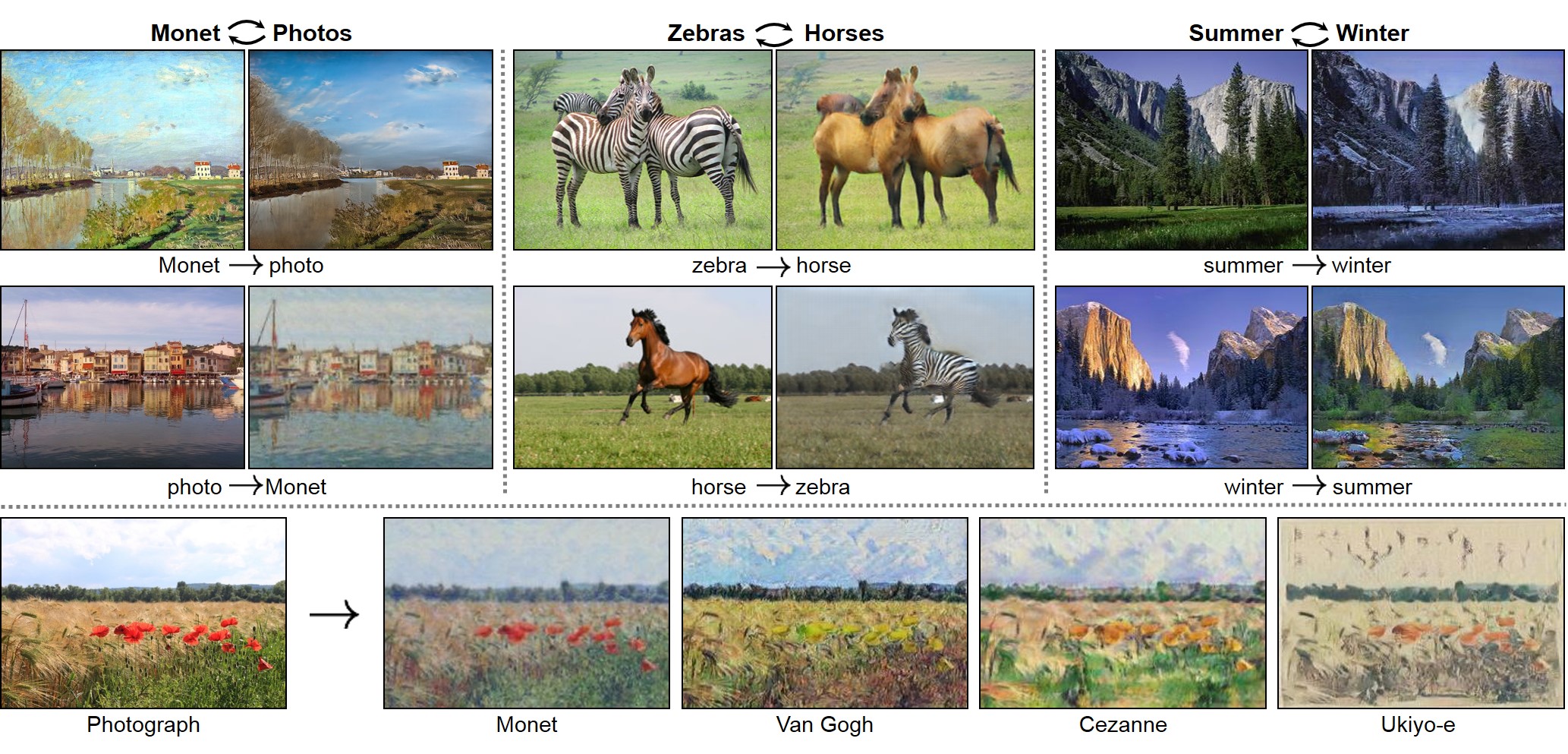

To misdirect the face recognition algorithm, the researchers used an image translation algorithm known as CycleGAN, which excels at transforming photographs from one style into another. For example, it can make a photo of a harbor look as if it were painted by Monet, or make a photo of mountains taken in summer look as if it had been taken in winter.

JUN-YAN ZHU AND TAESUNG PARK ET AL.
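CycleGAN learns two mappings between styles, one from style X to style Y (called G) and one back again (called F), and trains them so that translating an image to the other style and back returns roughly the original picture. The minimal sketch below shows only that cycle-consistency term, assuming toy "images" represented as short lists of pixel values and hand-written functions standing in for the neural networks a real CycleGAN trains. The names `l1_distance`, `cycle_consistency_loss`, `brighten`, `darken`, and `darken_too_little` are hypothetical and not from any library. A full CycleGAN also trains adversarial discriminators for each style, which are omitted here.

```python
from typing import Callable, List, Sequence

Vector = List[float]
Translator = Callable[[Sequence[float]], Vector]


def l1_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Mean absolute difference between two equal-length pixel vectors."""
    if len(a) != len(b) or not a:
        raise ValueError("vectors must be non-empty and the same length")
    return sum(abs(p - q) for p, q in zip(a, b)) / len(a)


def cycle_consistency_loss(
    G: Translator, F: Translator, xs: List[Vector], ys: List[Vector]
) -> float:
    """Cycle loss: x -> G -> F should return x, and y -> F -> G should return y."""
    forward = sum(l1_distance(F(G(x)), x) for x in xs) / len(xs)
    backward = sum(l1_distance(G(F(y)), y) for y in ys) / len(ys)
    return forward + backward


# Hypothetical toy "styles": G brightens every pixel by 1.0; F undoes it.
def brighten(v: Sequence[float]) -> Vector:
    return [p + 1.0 for p in v]


def darken(v: Sequence[float]) -> Vector:
    return [p - 1.0 for p in v]


def darken_too_little(v: Sequence[float]) -> Vector:
    # An imperfect inverse of brighten, which the cycle loss should penalize.
    return [p - 0.5 for p in v]


if __name__ == "__main__":
    xs = [[0.0, 0.5]]  # toy images in style X
    ys = [[1.0, 2.0]]  # toy images in style Y
    print(cycle_consistency_loss(brighten, darken, xs, ys))             # 0.0
    print(cycle_consistency_loss(brighten, darken_too_little, xs, ys))  # 1.0
```

A perfect round trip scores zero, while the mismatched pair is penalized. Training pushes G and F toward mappings that change an image's style without losing its content.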

The McAfee team fed 1,500 photos of each of the project's two leads into a CycleGAN to morph the two faces into one another. At the same time, they ran the CycleGAN's generated images through the facial recognition algorithm to see whom it recognized. After generating hundreds of images, the CycleGAN eventually produced a faked image that looked like person A to the naked eye but fooled the face recognition algorithm into identifying it as person B.

MCAFEE
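The search described above can be sketched as a filter over generated images: keep producing candidates and stop at the first one that the face recognizer labels as person B while a human would still see person A. This is a hypothetical illustration, not McAfee's code. The article does not say how the recognizer's feedback was wired into training or how "looks like person A" was judged, so `recognize` and `human_sees` are assumed stand-in callables, and the toy models treat each candidate as a single morph fraction between 0.0 (pure person A) and 1.0 (pure person B).

```python
from typing import Callable, Iterable, Optional, Tuple, TypeVar

Image = TypeVar("Image")


def find_targeted_misclassification(
    candidates: Iterable[Image],
    recognize: Callable[[Image], str],
    human_sees: Callable[[Image], str],
    source: str,
    target: str,
) -> Optional[Tuple[int, Image]]:
    """Return (index, image) for the first candidate that the recognizer
    labels as `target` while a human would still label it as `source`."""
    for index, image in enumerate(candidates):
        fools_machine = recognize(image) == target
        still_looks_like_source = human_sees(image) == source
        if fools_machine and still_looks_like_source:
            return index, image
    return None  # no candidate achieved the targeted misclassification


# Hypothetical toy models: each "image" is a morph fraction in [0.0, 1.0],
# where 0.0 is person A and 1.0 is person B.
def toy_recognizer(morph: float) -> str:
    # Assumed machine decision boundary.
    return "B" if morph >= 0.4 else "A"


def toy_human(morph: float) -> str:
    # Assumed point at which a human starts to see person B.
    return "B" if morph >= 0.7 else "A"


if __name__ == "__main__":
    generated = [step / 10 for step in range(11)]
    print(find_targeted_misclassification(
        generated, toy_recognizer, toy_human, source="A", target="B"))
    # prints (4, 0.4)
```

The thresholds 0.4 and 0.7 are invented for illustration. The point is only that a targeted attack succeeds in the gap where the machine's decision boundary and human perception disagree.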

While the study raises clear concerns about the security of face recognition systems, there are some caveats. First, the researchers didn't have access to the actual system that airports use to identify passengers and instead approximated it with a state-of-the-art, open-source algorithm. "I think for an attacker that is going to be the hardest part to overcome," Povolny says, "where [they] don't have access to the target system." Nonetheless, because face recognition algorithms are highly similar to one another, he thinks the attack would likely work even on the actual airport system.

Second, such an attack currently requires substantial time and resources. CycleGANs need powerful computers and specialized expertise to train and run.