GPT-3 is OpenAI’s latest and largest language AI model, which the San Francisco-based research lab began drip-feeding out in mid-July. In February of last year, OpenAI made headlines with GPT-2, an earlier version of the algorithm, which it announced it would withhold for fear it would be abused. The decision immediately sparked a backlash, as researchers accused the lab of pulling a stunt. By November, the lab had reversed position and released the model, saying it had detected “no strong evidence of misuse so far.”

The lab took a different approach with GPT-3; it neither withheld the model nor granted public access. Instead, it gave the algorithm to select researchers who applied for a private beta, with the goal of gathering their feedback and commercializing the technology by the end of the year.

Porr submitted an application. He filled out a form with a simple questionnaire about his intended use. But he also didn’t wait around. After reaching out to several members of the Berkeley AI community, he quickly found a PhD student who already had access. Once the graduate student agreed to collaborate, Porr wrote a small script for him to run. It gave GPT-3 the headline and introduction for a blog post and had it spit out several completed versions. Porr’s first post (the one that charted on Hacker News), and every post after, was a direct copy-and-paste from one of the outputs.

“From the time that I thought of the idea and got in contact with the PhD student to me actually creating the blog and the first blog going viral, it took maybe a couple of hours,” he says.

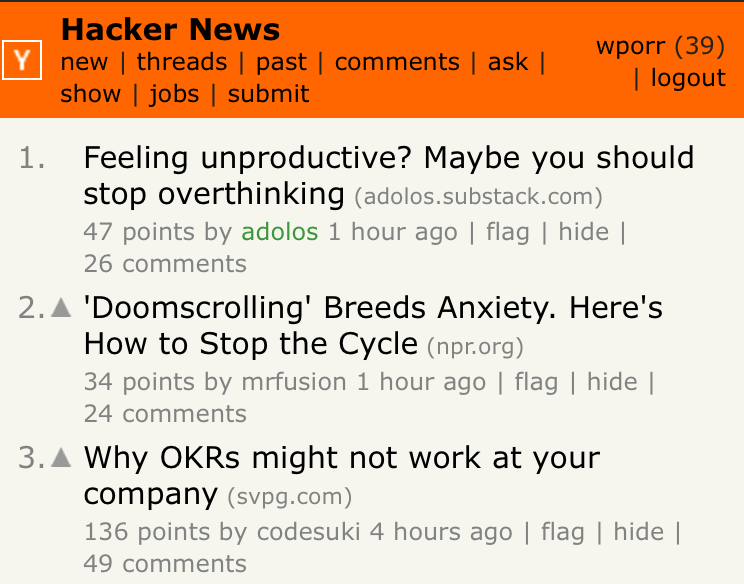

SCREENSHOT / LIAM PORR

The trick to generating content without the need for editing was understanding GPT-3’s strengths and weaknesses. “It’s quite good at making pretty language, and it’s not very good at being logical and rational,” says Porr. So he picked a popular blog category that doesn’t require rigorous logic: productivity and self-help.

From there, he wrote his headlines following a simple formula: he’d scroll around on Medium and Hacker News to see what was performing in those categories and put together something relatively similar. “Feeling unproductive? Maybe you should stop overthinking,” he wrote for one. “Boldness and creativity trumps intelligence,” he wrote for another. On a few occasions, the headlines didn’t work out. But as long as he stayed on the right topics, the process was easy.

After two weeks of nearly daily posts, he retired the project with one final, cryptic, self-written message. Titled “What I would do with GPT-3 if I had no ethics,” it described his process as a hypothetical. The same day, he also posted a more straightforward confession on his real blog.

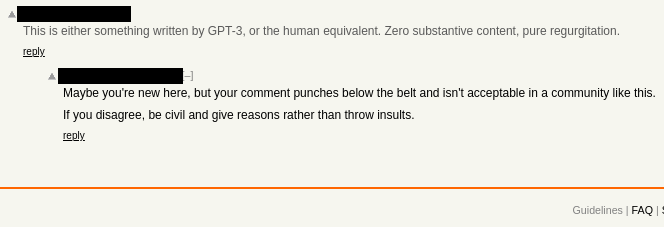

SCREENSHOT / LIAM PORR

Porr says he wanted to prove that GPT-3 could be passed off as a human writer. Indeed, despite the algorithm’s somewhat weird writing pattern and occasional errors, only three or four of the dozens of people who commented on his top post on Hacker News raised suspicions that it might have been generated by an algorithm. All those comments were immediately downvoted by other community members.

For experts, this has long been the worry raised by such language-generating algorithms. Ever since OpenAI first announced GPT-2, people have speculated that it was vulnerable to abuse. In its own blog post, the lab focused on the AI tool’s potential to be weaponized as a mass producer of misinformation. Others have wondered whether it could be used to churn out spam posts full of relevant keywords to game Google.

Porr says his experiment also shows a more mundane but still troubling alternative: people could use the tool to generate a lot of clickbait content. “It’s possible that there’s gonna just be a flood of mediocre blog content because now the barrier to entry is so easy,” he says. “I think the value of online content is going to be reduced a lot.”

Porr plans to do more experiments with GPT-3. But he’s still waiting to get access from OpenAI. “It’s possible that they’re upset that I did this,” he says. “I mean, it’s a little silly.”