High-value data is now widely available, yet organizations still fail to make proper use of about 43% of the data accessible to them. What is more, they use only 57% of the data they normally collect. The lack of a way to extract all the different types of data, together with unorganized or poorly structured data, keeps organizations from reaching the full potential of the information available to them. Ultimately, making the right decisions becomes harder.

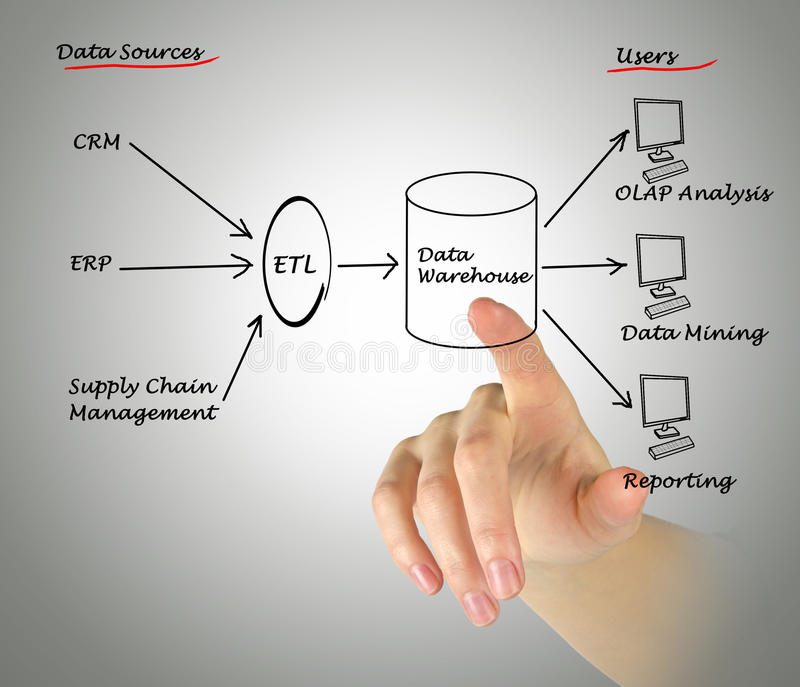

Data extraction is the process of retrieving data from a source so that it can be moved into a new context, whether cloud-based, on-site, or hybrid. Several strategies are commonly applied, and the process is often complex. Extraction is the first step in the ETL process: extraction, transformation, and loading. This means the data always undergoes further processing after the initial retrieval.
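As a rough illustration of the extract, transform, and load steps described above, here is a minimal sketch using only the Python standard library. The sample CSV text, the `orders` table, and all function names are assumptions made up for this example; a real pipeline would read from an actual file, API, or database.

```python
import csv
import io
import sqlite3

# Hypothetical source data; in practice this would be a file, API, or database.
RAW_CSV = """order_id,customer,amount
1001, Alice ,19.99
1002,bob,5.00
1003,Carol,
"""


def extract(text):
    """Extract: read the raw rows from the source without changing them."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: tidy names, convert types, and drop incomplete rows."""
    cleaned = []
    for row in rows:
        if not row["amount"].strip():
            continue  # skip rows with a missing amount
        cleaned.append(
            (
                int(row["order_id"]),
                row["customer"].strip().title(),
                float(row["amount"]),
            )
        )
    return cleaned


def load(rows, conn):
    """Load: write the cleaned rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


def run_pipeline(conn):
    """Run all three steps and return what ended up in the target."""
    load(transform(extract(RAW_CSV)), conn)
    query = "SELECT order_id, customer, amount FROM orders ORDER BY order_id"
    return conn.execute(query).fetchall()


if __name__ == "__main__":
    print(run_pipeline(sqlite3.connect(":memory:")))
```

Keeping the three steps as separate functions mirrors the point made above: extraction only retrieves the data, and the further processing happens afterwards.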

There are different reasons to perform data extraction. These include:

Data that is formatted to a standardized model, so that it is ready for further analysis, is called structured data. You can extract structured data using logical data extraction.
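Because structured data already follows a fixed model, extracting it can often be expressed as a query against that model. The sketch below is one possible illustration, assuming a hypothetical `customers` table in an in-memory SQLite database; the table, its columns, and the function names are invented for this example.

```python
import sqlite3


def build_sample_source():
    """Create a small in-memory database standing in for a real source."""
    conn = sqlite3.connect(":memory:")
    conn.row_factory = sqlite3.Row  # lets rows be read by column name
    conn.execute(
        "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, country TEXT)"
    )
    conn.executemany(
        "INSERT INTO customers (id, name, country) VALUES (?, ?, ?)",
        [(1, "Alice", "DE"), (2, "Bob", "US"), (3, "Carol", "DE")],
    )
    return conn


def extract_customers(conn, country):
    """Extract the rows for one country as plain dictionaries."""
    cursor = conn.execute(
        "SELECT id, name, country FROM customers WHERE country = ? ORDER BY id",
        (country,),
    )
    return [dict(row) for row in cursor]


if __name__ == "__main__":
    for record in extract_customers(build_sample_source(), "DE"):
        print(record)
```

The query uses a `?` placeholder rather than string formatting, which keeps the extraction safe when the filter value comes from user input.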

The process of structured data extraction breaks down into the following two categories:

Unstructured data extraction is arguably a more complex process, because the types of data in this group vary widely. Example sources include spool files, text documents, web pages, and emails.

Information from these sources is nonetheless highly valuable, so the capacity to extract and process unstructured data is as important as it is for structured data. To make this data ready for analysis, however, you need further processing beyond extraction.
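To give a feel for what pulling fields out of an unstructured source can look like, here is a small sketch that applies regular expressions to the text of an email. The sample message, the `INV-` invoice format, and the patterns themselves are assumptions for illustration only; real-world text usually needs more robust parsing.

```python
import re

# Hypothetical email body used only for this illustration.
EMAIL_TEXT = """From: billing@example.com
Subject: Invoice INV-2041 overdue

Hello, invoice INV-2041 for $1,250.00 was due on 2024-03-15.
Please contact support@example.com with questions.
"""

# Simplified patterns; they cover the sample text, not every possible format.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
INVOICE_RE = re.compile(r"\bINV-\d+\b")
DATE_RE = re.compile(r"\b\d{4}-\d{2}-\d{2}\b")
AMOUNT_RE = re.compile(r"\$(\d[\d,]*\.\d{2})")


def extract_fields(text):
    """Pull a few structured fields out of free-form text."""
    return {
        "emails": sorted(set(EMAIL_RE.findall(text))),
        "invoice_ids": sorted(set(INVOICE_RE.findall(text))),
        "dates": DATE_RE.findall(text),
        "amounts": [float(a.replace(",", "")) for a in AMOUNT_RE.findall(text)],
    }


if __name__ == "__main__":
    print(extract_fields(EMAIL_TEXT))
```

The result is a dictionary of typed fields, which is the kind of additional processing that unstructured data needs before it is ready for analysis.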

Data extraction, which includes web scraping, is the process of taking data from a given source and moving it to another context. Data mining, by contrast, is the process of discovering knowledge in databases.

While extraction is simply the movement of data, mining entails qualitative analysis of that data. Mining lets you survey data methodically to find overlooked patterns, insights, and relationships, as well as fraudulent activity.

Another key difference is that data mining requires data to be structured and cleaned up, while extraction can be performed on any type of data.
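The distinction above can be sketched in a few lines: one function only copies records out of a source, while the other analyzes them to flag an unusual value. The transaction list, the z-score approach, and the threshold of 2.0 are all assumptions chosen for this toy example, not a recommended fraud-detection method.

```python
from statistics import mean, pstdev

# Hypothetical transaction records standing in for a real data source.
TRANSACTIONS = [
    {"id": 1, "amount": 40.0},
    {"id": 2, "amount": 42.0},
    {"id": 3, "amount": 38.0},
    {"id": 4, "amount": 41.0},
    {"id": 5, "amount": 39.0},
    {"id": 6, "amount": 40.0},
    {"id": 7, "amount": 43.0},
    {"id": 8, "amount": 37.0},
    {"id": 9, "amount": 900.0},
]


def extract(source):
    """Extraction: copy the records out of the source unchanged."""
    return [dict(record) for record in source]


def mine_unusual(records, threshold=2.0):
    """Mining: flag records whose amount lies far from the average."""
    amounts = [record["amount"] for record in records]
    mu = mean(amounts)
    sigma = pstdev(amounts)
    if sigma == 0:
        return []  # all amounts identical, nothing stands out
    return [
        record["id"]
        for record in records
        if abs(record["amount"] - mu) / sigma > threshold
    ]


if __name__ == "__main__":
    records = extract(TRANSACTIONS)
    print("extracted:", len(records), "records")
    print("flagged ids:", mine_unusual(records))
```

Note that the mining step only works here because the extracted records are already clean and uniformly structured.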

Here are some of the most common business challenges when it comes to data extraction:

Various data extraction tools can make the process easier. These tools include:

This is an AI-powered data extraction tool with simple visual operation. It is compatible with Linux, Mac, and Windows, and it can recognize different data entities automatically. Its flowchart mode makes things easier when you need to develop complex scraping rules.

This is a self-service tool that requires no coding. You can connect it to different data sources easily. It comes with more than 80 built-in functions to cleanse and process data quickly and without errors, so you don’t have to spend a lot of time making your data readable.

This tool provides cloud-based data processing services for contracts, passports, invoices, and similar documents. Conversion takes only about 5 seconds for most documents. You can also run data classification and manipulation online around the clock.

This is another free, open-source option: an add-on for MS Excel. The tool specializes in social network analysis. It doesn’t offer a data integration feature, but it does provide additional features such as advanced network metrics, sentiment analysis, and report generation.

The nature of qualitative data extraction poses obstacles to procuring accurate samples, so at least minimal skills in data manipulation and coding are important. Manual tagging of data is possible, but it is labor-intensive. It can also lead to lower accuracy when large datasets are not available. As a result, small to mid-sized businesses will have a tough time accessing and extracting adequate data.

This is because the analysis of small datasets is more exposed to outliers, overfitting, and high dimensionality. It is therefore better to invest in the right tool; otherwise, the extracted data can lead to inaccurate interpretations.
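The outlier part of this point can be shown with simple arithmetic. The sketch below, built on made-up numbers, compares how much a single extreme value shifts the mean of a small sample versus a larger one; it does not attempt to illustrate overfitting or high dimensionality.

```python
from statistics import mean, median


def outlier_shift(sample, outlier):
    """Return how far one extra value moves the mean and the median."""
    with_outlier = sample + [outlier]
    return {
        "mean_shift": mean(with_outlier) - mean(sample),
        "median_shift": median(with_outlier) - median(sample),
    }


if __name__ == "__main__":
    small = [10.0] * 4   # hypothetical small dataset
    large = [10.0] * 99  # hypothetical larger dataset
    print("small:", outlier_shift(small, 110.0))
    print("large:", outlier_shift(large, 110.0))
```

With four typical values, the single outlier moves the mean by 20; with ninety-nine, it moves the mean by only 1, while the median stays put in both cases.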

Effective data analysis is key to optimizing business intelligence, and automated data processing is an excellent way to achieve it. Data extraction, however, is only the initial stage of data analysis. You must couple it with modification, classification, and sophisticated analysis as well. Fortunately, you can achieve all this by choosing the right AI-powered solution. It will not only improve your efficiency but also spare you from performing repetitive tasks.
